Have you noticed that whenever you are searching for products on amazon you can see that it shows the frequently bought together items? This feature on amazon is an classic example of Association rule learning in machine learning and the most basic algorithm for this association rule based learning is apriori algorithm.

There are various types of unsupervised machine learning techniques in ML like — clustering, dimensionality reduction, anomaly detection and association rules. Apriori algorithm is an association rule based machine learning algorithm. Like all other unsupervised techniques this also doesn’t require you to train the model rather the system infers a function or find a pattern in the data to basically describe an hidden structure in your unlabelled dataset.

|

| Fig. Apriori Algorithm |

Apriori algorithm refers to an algorithm that is used in mining frequent products sets and relevant association rules. To put it simply, the apriori algorithm is an association rule leaning that analyzes that people who bought product A also bought product B. What association based algorithms do, is that basically go through the entire data to find out if there any unique and different patterns in the data and hence apriori is also called frequent pattern mining.

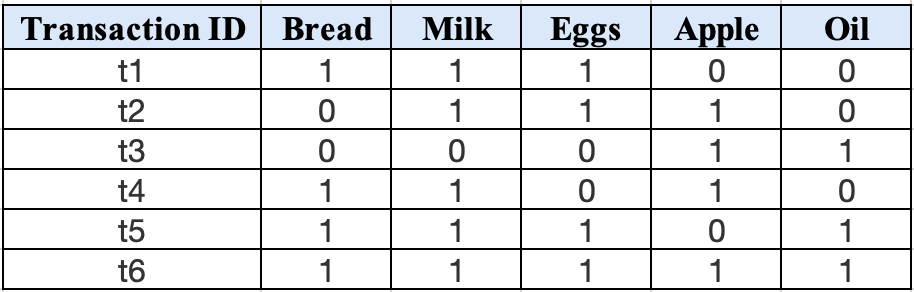

The apriori is generally run on a very large database with a lot of transactions of various different products. So lets take an example, suppose you own a supermarket and sell various items there and want to know which are the items that are bought together and hence want to see how you can put a sale or clear your stock or any other business and profit based decision you want to make. So your dataset you are working with may look like this —

|

| Fig. Transactions in Supermarket |

So, here as you can see that the supermarket has the following items, product set P = {Bread, Milk, Eggs, Apple, Oil} and the the transactions show what people have bought.

Few key terms you should know here are -

- Itemset — the items clubbed together like {Bread, Milk, Eggs} form an itemset and a k-itemset contains k items within it.

- Support Count — The frequency count of the occurring of a particular itemset becomes the support count for e.g., σ{Bread, Milk, Eggs} = 3

- Support — It is the fraction of transactions that contain the itemset for e.g., s{Bread, Milk, Eggs} = 3/6

- Frequent itemset — It is the itemset that has a support more than or equal to the minimum support, minimum support is defined by the user using the algorithm.

- Association rule — It just the implication expression after the entire process that is of the form X → Y, e.g., {Bread, Milk} → {Eggs}

- Confidence — This measures how often items in Y appear in transactions that contain X.

- Lift — This measures how many times more often X and Y occur together than expected if they where statistically independent. This basically is measuring the dependent or correlated events.

Formula for support, confidence and lift—

Support(A) = (No. of transactions in which A appears) / (Total no. of transactions)

Confidence(A → B) = Support(A U B) / Support(A)

lift(A → B) = Support(A,B) / {Support(A).Support(B)}

Association rule mining concepts —

- Given a set of transactions, T the goal of association rule mining is to find rules having support ≥ minimum support threshold and confidence ≥ minimum confidence threshold

- So next it is basically a brute-force approach which starts by listing all possible association rules then computing confidence and support for each of them and then pruning the rules that fail the minimum support and minimum threshold

All this has a lot of calculations and hence this is computationally prohibitive approach, but for this blog we will be using this approach itself.

Lets first understand the Apriori principle — if an itemset is frequent then all its subsets must also be frequent. Which also means that — The subsets of an infrequent item set must be infrequent.

So, now lets solve this problem to get a basic understanding of apriori —

Lets generate the association rules for the above figure transactions considering the minimum support at 50% and minimum confidence at 50% using basic brute force apriori algorithm.

Step 1 —

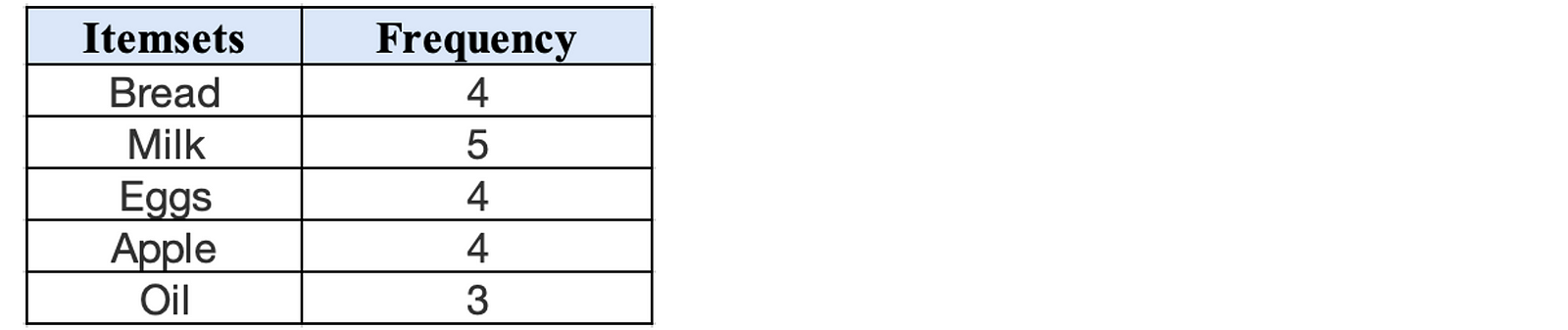

Generate a frequency table for all items, basically the item and the number of transactions the item occurs in.

Step 2 —

Create a pair of items for all the above items creating a set of two items and then their frequency of occurring in the transactions.

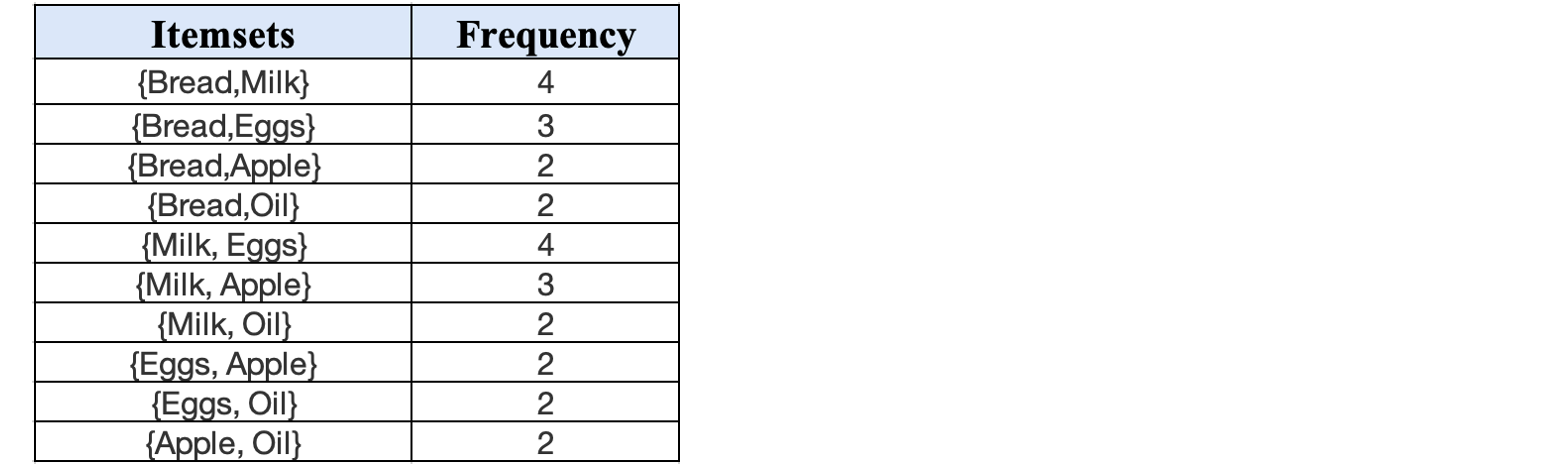

Step 3 —

Check the count for the frequency. Since in our case we are taking a 50% minimum support threshold that would mean 50% of total transactions which is 3. So our minimum count is 3. So remove all below 3 and keep the rest.

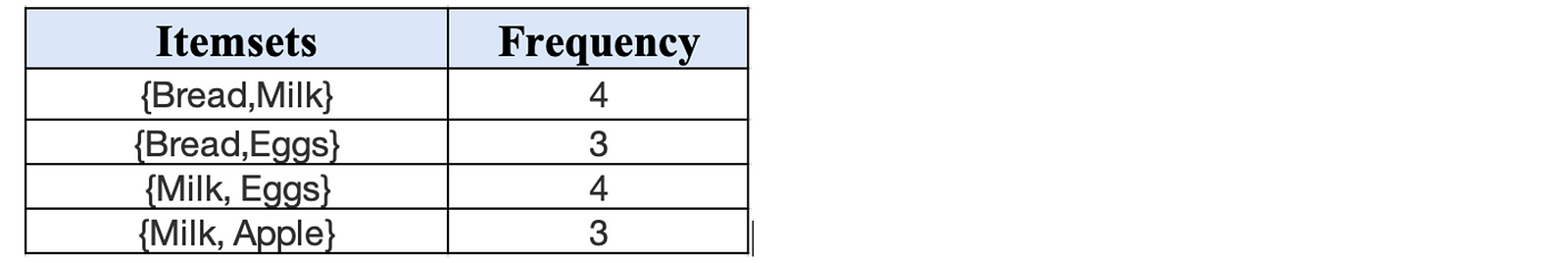

Step 4 —

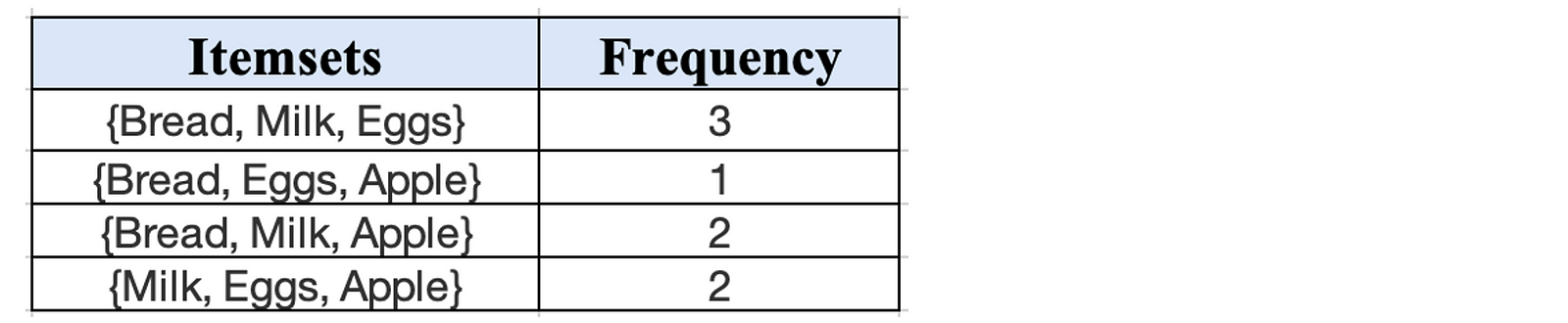

Now if you want to generate the frequently bought together for 3 items repeating similarly we would have the itemsets and the frequency as given in the frequency table below.

If you see here, we generated the frequent itemsets from the above itemsets possible and then found the frequency count and our support is set at 3. So, the most frequently occurring 3 itemset combination of items is {Bread, Milk, Eggs}.

References

- https://www.javatpoint.com/apriori-algorithm-in-machine-learning

- https://www.geeksforgeeks.org/apriori-algorithm/

- https://www.analyticsvidhya.com/blog/2022/01/underrated-apriori-algorithm-based-unsupervised-machine-learning/

- https://www.javatpoint.com/apriori-algorithm

- https://medium.com/analytics-vidhya/apriori-algorithm-in-association-rule-learning-9287fe17e944